In the last activity, we used the average RGB value of an image to classify it as either a basketball or a football. We did not take into account other images, such as baseballs or ping pong balls, so the method was rather crude.

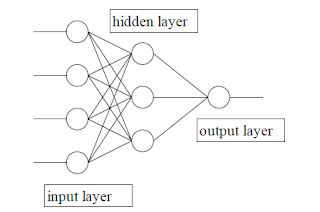
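For reference, the average-RGB approach from the last activity can be sketched in Python as below. This is an illustration under assumptions, not the original code: it assumes each class is summarised by a mean colour and that an image is assigned to the nearest mean. The names `average_rgb` and `nearest_class` and the class means are hypothetical.

```python
# Sketch of an average-RGB classifier (assumption: each class is represented
# by a mean colour, and an image gets the label of the nearest mean).
# Images are plain lists of (r, g, b) pixel tuples.

def average_rgb(pixels):
    """Return the mean (r, g, b) of a list of pixel tuples."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))

def nearest_class(pixels, class_means):
    """Label an image by the class whose mean colour is closest (squared Euclidean distance)."""
    r, g, b = average_rgb(pixels)

    def dist(mean):
        return (r - mean[0]) ** 2 + (g - mean[1]) ** 2 + (b - mean[2]) ** 2

    return min(class_means, key=lambda label: dist(class_means[label]))

# Hypothetical class means, for illustration only.
class_means = {"basketball": (200.0, 110.0, 40.0), "football": (120.0, 70.0, 40.0)}
orange_image = [(210, 120, 30), (190, 100, 50)]
print(nearest_class(orange_image, class_means))
```

Because the classifier can only choose between the two stored means, an image of a baseball or a ping pong ball is still forced into one of the two labels, which is exactly why the method is crude.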

In a neural network, one can train the computer to respond to certain "stimuli", that is, characteristics or parameters of the image being processed, and to label or flag the image accordingly. Each input (stimulus) is multiplied by a connection weight before it activates the neurons in the hidden layer, so the weights determine how the network responds. During training, these weights are corrected using samples whose labels are already known.

Figure 1: Diagram of a simplified neural network
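The weighting and correction described above can be illustrated with a single sigmoid neuron. The Python sketch below is not the toolbox's implementation; the sigmoid activation, the squared-error gradient step and the helper names `neuron_output` and `correct_weights` are assumptions chosen for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Weighted sum of the inputs ("stimulus") passed through a sigmoid activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)

def correct_weights(inputs, target, weights, bias, rate):
    """One gradient-descent step on the squared error 0.5 * (target - y)**2."""
    y = neuron_output(inputs, weights, bias)
    delta = (target - y) * y * (1.0 - y)  # error times the sigmoid derivative
    new_weights = [w + rate * delta * x for w, x in zip(weights, inputs)]
    new_bias = bias + rate * delta
    return new_weights, new_bias

weights, bias = [0.0, 0.0], 0.0
print(neuron_output([1, 1], weights, bias))  # 0.5 before any correction
weights, bias = correct_weights([1, 1], 1, weights, bias, rate=2.5)
print(neuron_output([1, 1], weights, bias))  # moves toward the target of 1
```

A single correction nudges every weight in the direction that reduces the error for that sample; repeating this over all training samples for many cycles is what the training call in the code snippet does.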

In our activity we have 30 samples, 2 classes (basketball or football), and only 1 feature (subject to change). The shape and size of the balls are not useful features, since both balls are circular and their pixel areas vary.
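With two classes, a single output neuron with a 0/1 target is enough, the same way the `and` example uses `t = [0 0 0 1]`. The sketch below arranges single-feature samples with one column per sample, matching the layout of `x` in the Scilab code. The feature values, the 0 = basketball / 1 = football coding, the 0.5 cutoff and the choice of two hidden neurons are all hypothetical.

```python
# Sketch: arranging single-feature samples with one column per sample.
# Feature values and the class coding are hypothetical.

LABELS = {"basketball": 0, "football": 1}

def build_training_set(samples):
    """samples: list of (feature_value, label) pairs.
    Returns x as a 1 x n matrix (one row per feature, one column per sample)
    and t as a 1 x n row of 0/1 targets."""
    x = [[value for value, _ in samples]]
    t = [[LABELS[label] for _, label in samples]]
    return x, t

def decode(output):
    """Map a network output in [0, 1] back to a class name using a 0.5 cutoff."""
    return "football" if output >= 0.5 else "basketball"

samples = [(0.62, "basketball"), (0.35, "football"), (0.58, "basketball")]
x, t = build_training_set(samples)
N = [len(x), 2, 1]  # one input neuron per feature row, 2 hidden (assumed), 1 output
print(x, t, N)
```

Adding a second feature later would only add a row to `x` and change the first entry of `N`; the target row and the decoding stay the same.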

Code snippet used [1]:

```scilab
// Simple NN that learns 'and' logic

// ensure the same starting point each time
rand('seed',0);

// network definition: neurons per layer, including the input layer
// 2 neurons in the input layer, 2 in the hidden layer and 1 in the output layer
N = [2,2,1];

// inputs: after the transpose, each column is one sample
x = [1,0;
     0,1;
     0,0;
     1,1]';

// targets: 0 if there is at least one 0, and 1 if both inputs are 1
t = [0 0 0 1];

// learning rate is 2.5 and 0 is the threshold for the error tolerated by the network
lp = [2.5,0];

W = ann_FF_init(N);

// 400 training cycles
T = 400;

// x is the training input, t is the target output, W holds the initialized weights,
// N is the NN architecture, lp holds the learning rate and error threshold,
// and T is the number of iterations
W = ann_FF_Std_online(x,t,N,W,lp,T);

// full run: the network N is tested using x as the test set and W as the connection weights
ann_FF_run(x,N,W)

//// encoder
//encoder = ann_FF_run(x,N,W,[2,2])

//// decoder
//decoder = ann_FF_run(encoder,N,W,[3,3])
```
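To make the toolbox calls less of a black box, here is a rough Python analogue of the Scilab script: a 2-2-1 sigmoid network with biases, trained by online back-propagation with a learning rate of 2.5 for 400 epochs. It is a sketch under assumptions, not a reimplementation of `ann_FF_Std_online`: the uniform weight initialisation, the bias handling and reading the threshold `dmax` as "errors at or below this are not propagated" are assumptions, and the random numbers will not match those from Scilab's `rand('seed',0)`.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_weights(n_in, n_hidden, rng):
    """Uniform random weights in [-1, 1]; the last entry of each row is a bias."""
    hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    return hidden, output

def hidden_layer(x, hidden):
    """Inputs -> hidden activations (roughly the [2,2] 'encoder' run)."""
    # zip stops at len(x), so the bias row[-1] is added separately
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + row[-1]) for row in hidden]

def output_layer(h, output):
    """Hidden activations -> network output (roughly the [3,3] 'decoder' run)."""
    return sigmoid(sum(w * hi for w, hi in zip(output, h)) + output[-1])

def forward(x, hidden, output):
    h = hidden_layer(x, hidden)
    return h, output_layer(h, output)

def train_online(xs, ts, hidden, output, rate, epochs, dmax=0.0):
    """Per-sample back-propagation of the squared error 0.5 * (t - y)**2.
    Samples with |t - y| <= dmax are skipped (assumed meaning of lp(2))."""
    for _ in range(epochs):
        for x, t in zip(xs, ts):
            h, y = forward(x, hidden, output)
            err = t - y
            if abs(err) <= dmax:
                continue
            delta_out = err * y * (1 - y)
            # hidden deltas use the output weights before they are updated
            delta_h = [delta_out * output[j] * h[j] * (1 - h[j]) for j in range(len(h))]
            for j in range(len(h)):
                output[j] += rate * delta_out * h[j]
            output[-1] += rate * delta_out
            for j, row in enumerate(hidden):
                for i in range(len(x)):
                    row[i] += rate * delta_h[j] * x[i]
                row[-1] += rate * delta_h[j]
    return hidden, output

rng = random.Random(0)  # fixed seed, like rand('seed',0), but a different generator
xs = [[1, 0], [0, 1], [0, 0], [1, 1]]  # one row per sample here
ts = [0, 0, 0, 1]
hidden, output = init_weights(2, 2, rng)
hidden, output = train_online(xs, ts, hidden, output, rate=2.5, epochs=400, dmax=0.0)

outputs = [forward(x, hidden, output)[1] for x in xs]
codes = [hidden_layer(x, hidden) for x in xs]        # "encoder": hidden activations
decoded = [output_layer(c, output) for c in codes]   # "decoder": output from those codes
print([round(y, 3) for y in outputs])
```

`hidden_layer` and `output_layer` play roughly the roles of the commented-out encoder (`[2,2]`) and decoder (`[3,3]`) runs: the first maps the inputs to hidden activations, and the second maps those activations to the final output, so chaining them reproduces the full run.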

References:

1. Soriano, Jing, Neural Networks, 2013
